Sweden is a leader in terms of digitalisation, but poorer municipalities struggle to find the resources to develop digital forms of politics. Image: Stockholm by Peter Tandlund (Flickr CC BY-NC-ND 2.0)

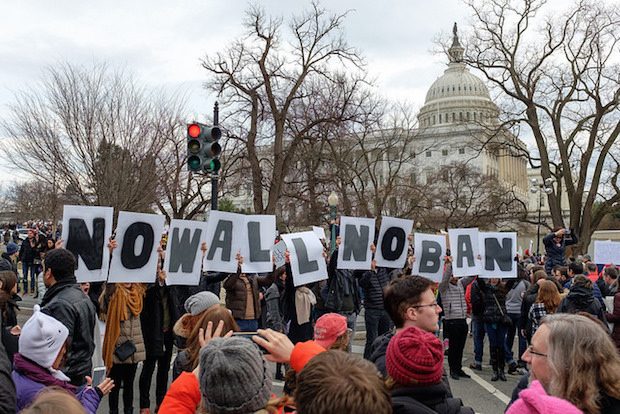

While much of the modern political process is now carried out digitally, ICTs have yet to bring democracies to their full utopian ideal. The drivers of involvement in digital politics from an individual perspective are well studied, but less attention has been paid to the supply side of online engagement in politics. In his Policy & Internet article “Inequality in Local Digital Politics: How Different Preconditions for Citizen Engagement Can Be Explained,” Gustav Lidén examines the supply of channels for digital politics distributed by Swedish municipalities, in order to understand the drivers of variation in local online engagement.

He finds a positive trajectory for digital politics in Swedish municipalities, but with significant variation between municipalities when it comes to opportunities for engagement in local politics via their websites. These patterns are explained primarily by population size (digital politics is costly, and larger societies are probably better able to carry these costs), but also by economic conditions and education levels. He also finds that a lack of policies and unenthusiastic politicians create poor possibilities for development, verifying previous findings that without citizen demand, and ambitious politicians, successful provision of channels for digital politics will be hard to achieve.

We caught up with Gustav to discuss his findings:

Ed.: I guess there must be a huge literature (also in development studies) on the interactions between connectivity, education, the economy, and supply and demand for digital government; and what the influencers are in each of these relationships. Not to mention causality. I’m guessing “everything is important, but nothing is clear”. Is that fair? And do you think any “general principles” explaining demand and supply of electronic government/democracy could ever be established, if they haven’t already?

Gustav: Although the literature in this field is becoming vast, the subfield that I am primarily engaged in, that is, the conditions for digital policy at the subnational level, has only recently attracted greater numbers of scholars. Even if predictors of these…