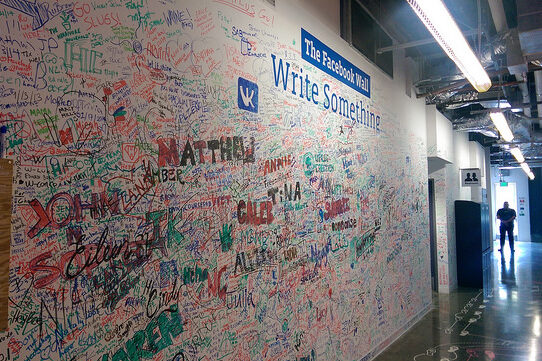

The increased reliance on Internet technology impacts human rights. Image: Bruno Cordioli (Flickr CC BY 2.0).

The Internet has drastically reshaped communication practices across the globe, along with many other aspects of modern life. This increased reliance on Internet technology also impacts human rights. The United Nations Human Rights Council has reaffirmed many times (most recently in a 2016 resolution) that “the same rights that people have offline must also be protected online”. However, international human rights monitoring bodies and courts have given only limited guidance on how to apply human rights law to the design and use of Internet technology, especially when that technology is developed by non-state actors. And while the Internet can certainly facilitate the exercise and fulfilment of human rights, it is also conducive to human rights violations, and many Internet organisations and companies are currently grappling with their responsibilities in this area.

To help understand how digital technology can support the exercise of human rights, we (Corinne Cath, Ben Zevenbergen, and Christiaan van Veen) organised a workshop on ‘Coding Human Rights Law’ at the 2017 Citizen Lab Summer Institute in Toronto. By bringing together academics, technologists, human rights experts, lawyers, government officials, and NGO employees, we hoped to pool experience and scope the field in order to:

1. Explore the relationship between connected technology and human rights;
2. Understand how this technology can support the exercise of human rights;
3. Identify current bottlenecks for integrating human rights considerations into Internet technology; and
4. List recommendations to guide the various stakeholders working on technology that strengthens human rights.

In the workshop report “Coding Human Rights Law: Citizen Lab Summer Institute 2017 Workshop Report”, we give an overview of the discussion, addressing multiple legal and technical concerns. We consider the legal issues that arise because human rights law is state-centric, while most connected technologies are developed by the private sector.
We also discuss the applicability of current international human rights frameworks to debates about new technologies. We cover the technical issues that arise when trying to code for human rights, in…