Category: Governance & Security

-

How do we encourage greater public inclusion in Internet governance debates?

The Internet is neither purely public nor private, but combines public and private networks, platforms,…

-

Governments Want Citizens to Transact Online: And This Is How to Nudge Them There

Peter John and Toby Blume design and report a randomised controlled trial that encouraged users…

-

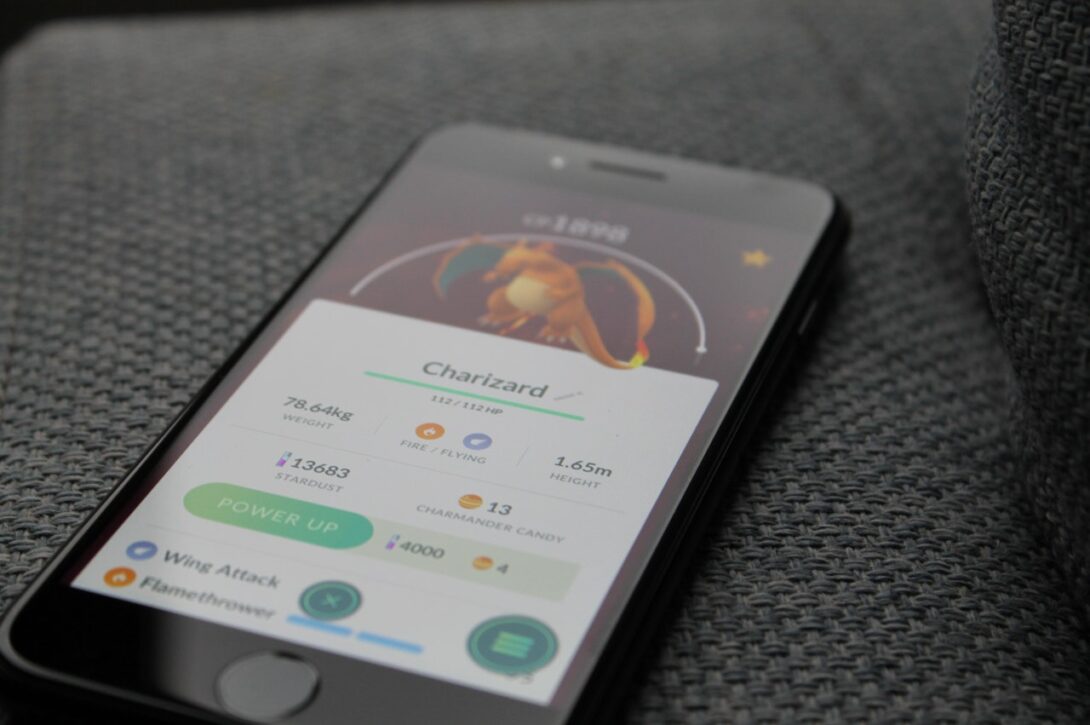

Five Pieces You Should Probably Read On: Reality, Augmented Reality and Ambient Fun

Things you should probably know, and things that deserve to be brought out for another…

-

Could data pay for global development? Introducing data financing for global good

Are there ways in which the data economy could directly finance global causes such as…

-

The blockchain paradox: Why distributed ledger technologies may do little to transform the economy

Applying elementary institutional economics to examine what blockchain technologies really do in terms of economic…

-

Assessing the Ethics and Politics of Policing the Internet for Extremist Material

Exploring the complexities of policing the web for extremist material, and its implications for security,…

-

New Voluntary Code: Guidance for Sharing Data Between Organisations

For data sharing between organisations to be straightforward, there needs to be a common understanding…

-

Controlling the crowd? Government and citizen interaction on emergency-response platforms

Government involvement in crowdsourcing efforts can actually be used to control and regulate volunteers from…