All Articles

-

Does crowdsourcing citizen initiatives affect attitudes towards democracy?

Exploring how involvement in citizen initiatives affects attitudes towards democracy

-

Do Finland’s digitally crowdsourced laws show a way to resolve democracy’s “legitimacy crisis”?

Discussing the digitally crowdsourced law for same-sex marriage that was passed in Finland and analysing…

-

Assessing crowdsourcing technologies to collect public opinion around an urban renovation project

How do you increase the quality of feedback without placing citizens on different-level playing fields…

-

Crowdsourcing ideas as an emerging form of multistakeholder participation in Internet governance

Assessing the extent to which crowdsourcing represents an emerging opportunity of participation in global public…

-

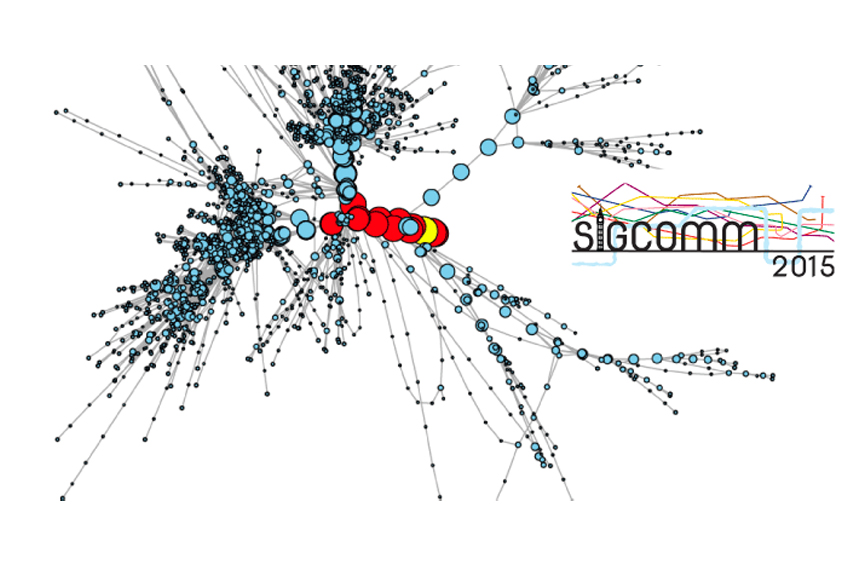

Ethics in Networked Systems Research: ACM SigComm Workshop Report

– in Ethics

Experimentation and research on the Internet require ethical scrutiny in order to give useful feedback…

-

Using Wikipedia as PR is a problem, but our lack of a critical eye is worse

That Wikipedia is used for less than scrupulously neutral purposes shouldn’t surprise us – our lack…

-

Crowdsourcing for public policy and government

The growing interest in crowdsourcing for government and public policy must be understood in the…

-

Current alternatives won’t light up Britain’s broadband blackspots

Satellites, microwaves, radio towers – how many more options must be tried before the government…

-

Should we love Uber and Airbnb or protest against them?

– in Economics

Some theorists suggest that such platforms are making our world more efficient by natural selection.…

-

Uber and Airbnb make the rules now — but to whose benefit?

Outlining a more nuanced theory of institutional change that suggests that platforms’ effects on society…