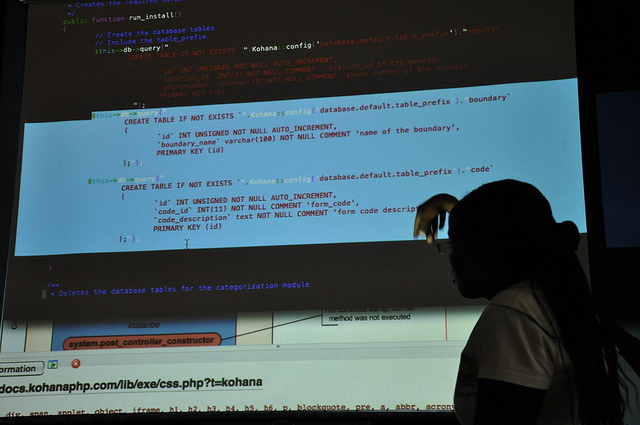

‘Code’ or ‘law’? Image from an Ushahidi development meetup by afropicmusing.

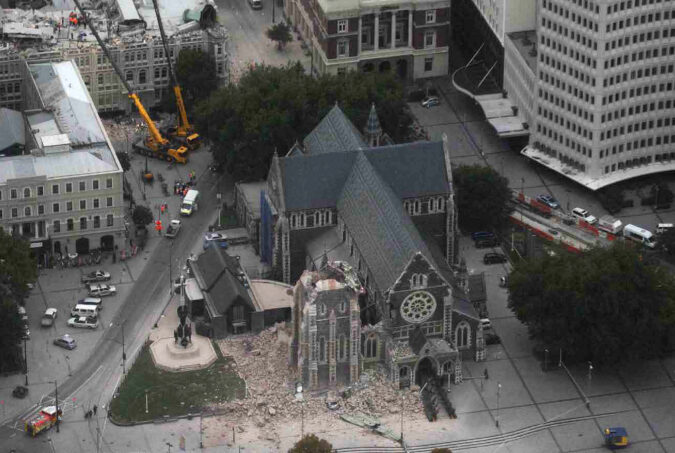

In ‘Code and Other Laws of Cyberspace’, Lawrence Lessig (2006) writes that computer code (what he calls ‘West Coast code’) can have the same regulatory effect as the laws and legal code developed in Washington D.C., so-called ‘East Coast code’. Computer code shapes a person’s behaviour through its essentially restrictive architecture: on some websites you must enter a password before you gain access, while on others you can enter unidentified. The problem with computer code, Lessig argues, is that it is invisible, and that it makes it easy to regulate people’s behaviour directly and often without recourse.

For example, fair use provisions in US copyright law permit certain uses of copyrighted works, such as copying for research or teaching purposes. However, the architecture of many online publishing systems heavily regulates what one can do with an e-book: how many times it can be transferred to another device, how many times it can be printed, whether it can be converted to a different format. These are activities that were unregulated until now, or that are permitted by the law but effectively ‘closed off’ by code. In this case code works to reshape behaviour, upsetting the balance between the rights of copyright holders and the rights of the public to access works in support of values like education and innovation.

Working as an ethnographic researcher for Ushahidi, the non-profit technology company that makes tools for people to crowdsource crisis information, has made me acutely aware of the many ways in which ‘code’ can become ‘law’. During my time at Ushahidi, I studied the practices that people used to verify reports from people affected by a variety of events, from earthquakes to elections, from floods to bomb blasts. I then compared these processes with those followed by Wikipedians when editing articles about breaking news events. In order to understand how best to design architecture to enable particular behaviour, it becomes important to…