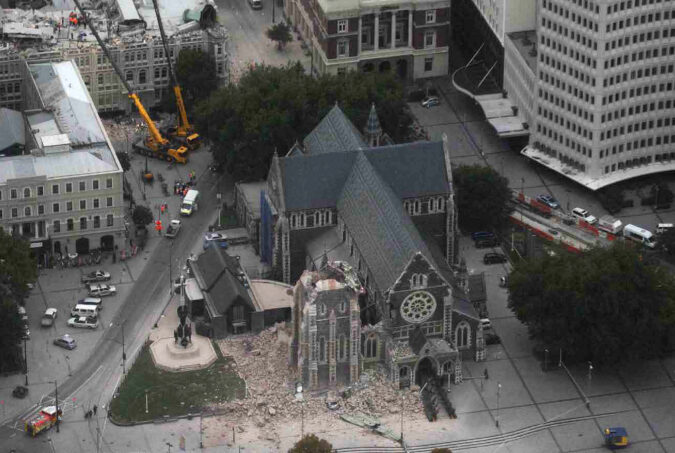

On 12 August 2015, a series of explosions killed 173 people and injured hundreds at a container storage station at the Port of Tianjin. Tianjin Port by Matthias Catón (Flickr CC BY-NC-ND 2.0).

As social media become increasingly important as a source of news and information for citizens, there is growing concern over the impact of social media platforms on information quality, as evidenced by the furore over “fake news”. Driven in part by the apparently substantial impact of social media on the outcomes of the Brexit referendum and the US presidential election, various attempts have been made to hold social media platforms to account for presiding over misinformation, with recent efforts to improve fact-checking.

There is a large and growing body of research examining rumour management on social media platforms. However, most of these studies treat it as a technical matter, and little attention has been paid to the social and political aspects of rumour. In their Policy & Internet article “How Social Media Construct ‘Truth’ Around Crisis Events: Weibo’s Rumor Management Strategies after the 2015 Tianjin Blasts”, Jing Zeng, Chung-hong Chan and King-wa Fu examine the content moderation strategies that Sina Weibo, China’s largest microblogging platform, used to regulate discussion of rumours following the 2015 Tianjin blasts.

Studying rumour communication in relation to the manipulation of social media platforms is particularly important in the context of China. Internet companies there are licensed by the state, so their businesses must comply with Chinese law and collaborate with the government in monitoring and censoring politically sensitive topics. Given that most Chinese citizens rely heavily on Chinese social media services as alternative information sources or as grassroots “truth”, the anti-rumour policies have raised widespread concern over the implications for China’s online sphere. As there is virtually no transparency in rumour management on Chinese social media, it is an important task for researchers to investigate how Internet platforms engage with rumour content, and what impact this has on public discussion.

We caught up with the authors to discuss their findings:

Ed.: “Fake news” is currently a very hot issue, with Twitter and Facebook both…