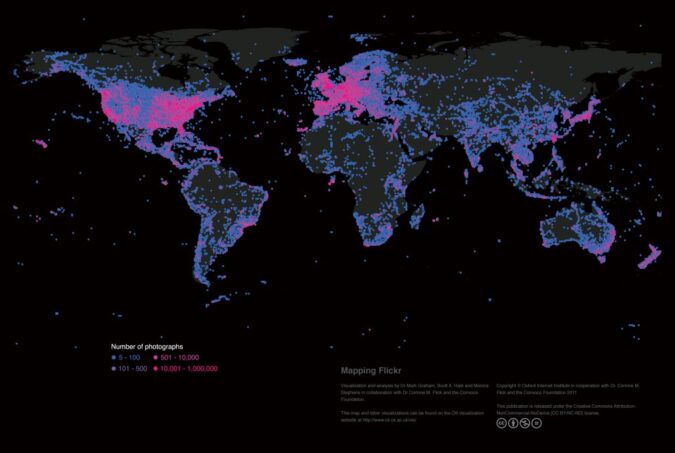

Editors from all over the world have played some part in writing about Egypt; in fact, only 13% of all edits actually originate in the country (38% are from the US). More: Who edits Wikipedia? by Mark Graham.

Ed: In basic terms, what patterns of ‘information geography’ are you seeing in the region?

Mark: The first pattern that we see is that the Middle East and North Africa are relatively under-represented in Wikipedia. Even after accounting for factors like population, Internet access, and literacy, we still see less content than would be expected. Second, of the content that exists, a lot of it is in English and French rather than in Arabic (or Farsi or Hebrew). In other words, there is even less in local languages. And finally, if we look at contributions (or edits), not only do we see a relatively small number of edits originating in the region, but many of those edits are being used to write about other parts of the world rather than their own region. What this broadly seems to suggest is that the participatory potentials of Wikipedia aren’t yet being harnessed in order to even out the differences between the world’s informational cores and peripheries.

Ed: How closely do these online patterns in representation correlate with regional (offline) patterns in income, education, language, access to technology (etc.)? Can you map one to the other?

Mark: Population and broadband availability alone explain a lot of the variance that we see. Other factors like income and education also play a role, but it is population and broadband that have the greatest explanatory power here. Interestingly, most countries in the MENA region fail to fit those predictors well.

Ed: How much do you think these patterns result from the systematic imposition of a particular viewpoint, such as official editorial policies, as opposed to the (emergent) outcome of lots of users and editors…

The Middle East and North Africa are relatively under-represented in Wikipedia. Even after accounting for factors like population, Internet access, and literacy, we still see less content than would be expected.